Flexible typing in a static world

Sometimes, we see a heated debate about the benefits of static vs. dynamic typing. IMHO, the debate becomes heated because of an unspoken assumption that you can’t compromise. You have to take a side. The types are either fixed or they aren’t. Nowadays, we see a lot of examples that erode that view. Optional typing, type inference, and more powerful type systems are language features that help design better APIs. But what do you do if you’re constrained by a less flexible language?

I need to integrate Java and Python code, and because a task queue is simpler to manage than direct-call microservices, I’ve decided to port the Celery library to Java.

Quoting from the website of the Celery Python library:

Celery is an asynchronous task queue/job queue based on distributed message passing. It is focused on real-time operation, but supports scheduling as well.

The execution units, called tasks, are executed concurrently on a single or more worker servers using multiprocessing, Eventlet, or gevent. Tasks can execute asynchronously (in the background) or synchronously (wait until ready).

Celery is used in production systems to process millions of tasks a day.

I’ll share more about the architecture, the use case and the motivations in a later post. Today, I’ll write about an approach to integrate dynamic, flexible APIs of Python into the static, rigid Java world. You can find the Java library at crabhi.github.io/celery-java.

How Celery works in Python

It’s quite natural to use Celery in Python. You write a function that does useful stuff. Let’s say it resizes images. You can call the function directly. But if you’re handling a web request, you don’t want to block the whole process while the function computes. You’d rather offload the task to some worker, maybe running on a different machine. Maybe there are multiple workers. You don’t care. You just want to be sure the task gets computed so you can acknowledge it to your user.

Enter Celery and a message queue. Celery lets you submit a task to the message queue, run a worker elsewhere that picks it up, and optionally return the result in an RPC (remote procedure call) style. This is the example from First Steps with Celery:

- Define the task.

      # tasks.py
      import celery

      app = celery.Celery('tasks', broker='amqp://rabbit//', backend='rpc://rabbit//')

      @app.task()
      def add(x, y):
          return x + y

- Run your worker. The worker needs to connect to the queue. Nobody connects to the worker. You can run it in a new virtual machine and not care about addressing.

      $ celery -A tasks worker

       -------------- celery@yourhost v4.1.0 (latentcall)
      ---- **** -----
      --- * ***  * -- Linux-4.13.0-16-lowlatency-x86_64-with-Ubuntu-17.10-artful 2017-11-20 20:03:10
      -- * - **** ---
      - ** ---------- [config]
      - ** ---------- .> app:         tasks:0xdeadbeef
      - ** ---------- .> transport:   amqp://rabbit:5672//
      - ** ---------- .> results:     rpc://
      - *** --- * --- .> concurrency: 8 (prefork)
      -- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)
      --- ***** -----
       -------------- [queues]
                      .> celery           exchange=celery(direct) key=celery

- Call the function remotely.

      from tasks import add

      task_result = add.delay(4, 2)
      # later, you can pick up the result
      print(task_result.get())  # prints 6

This API is quite ergonomic because it lets users add the RPC functionality to any of their functions. On the other hand, it doesn’t hide the fact that the calls are remote. You call them in a slightly different manner (.delay(...)) and they return a wrapper object that represents a not-yet-computed value.

This is possible thanks to Python being quite lax about its types.

- The functions are objects.

- You can add arbitrary attributes to most objects in Python.

- Types of the variables are not statically defined. The @app.task decorator may replace the function with a completely new object.

Porting to Java

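In Java, you’re much more limited. Methods don’t stand alone; they always belong to a class. Even a Java 8 lambda is essentially compile-time syntactic sugar for an instance of a functional interface, not a free-standing function object you could attach attributes to.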

Let’s say we have a task method to add numbers in Java.

    class Tasks {
        public int add(int x, int y) {
            return x + y;
        }
    }

Now, how to call the method remotely? You could call it dynamically:

    Future<Object> result = celery.delayTask(
        Tasks.class, "add", new Object[]{ 1, 2 }
    );
    Integer sum = (Integer) result.get();
    System.out.println(sum);

This doesn’t look very nice. The method name is a string, so refactoring tools won’t find it when we rename the method, and the cast of the result is only checked at runtime. And there’s no way to implement calling tasks with keyword arguments from Python (unless you want to rely on debug symbols being present).
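To make the weakness concrete, here is a minimal in-process sketch. SketchCelery is a hypothetical stand-in, not the celery-java client: instead of sending a message to a broker, it looks the method up by name with reflection and runs it locally. That is enough to show that a misspelled task name compiles without complaint and only fails once the call is actually made.

```java
import java.lang.reflect.Method;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

class Tasks {
    public int add(int x, int y) {
        return x + y;
    }
}

// Hypothetical stand-in for the task client: no broker, the task runs locally.
class SketchCelery {
    Future<Object> delayTask(Class<?> taskClass, String methodName, Object[] args) {
        CompletableFuture<Object> result = new CompletableFuture<>();
        try {
            Object target = taskClass.getDeclaredConstructor().newInstance();
            // The method is found by its name at run time; the compiler never checks it.
            for (Method m : taskClass.getMethods()) {
                if (m.getName().equals(methodName) && m.getParameterCount() == args.length) {
                    result.complete(m.invoke(target, args));
                    return result;
                }
            }
            result.completeExceptionally(new NoSuchMethodException(methodName));
        } catch (ReflectiveOperationException | IllegalArgumentException e) {
            result.completeExceptionally(e);
        }
        return result;
    }
}

class DynamicCallDemo {
    public static void main(String[] args) throws Exception {
        SketchCelery celery = new SketchCelery();

        Object sum = celery.delayTask(Tasks.class, "add", new Object[]{1, 2}).get();
        System.out.println((Integer) sum);

        try {
            // The typo compiles; it only surfaces once the call is made.
            celery.delayTask(Tasks.class, "ad", new Object[]{1, 2}).get();
        } catch (ExecutionException e) {
            System.out.println("runtime failure: " + e.getCause());
        }
    }
}
```

With a real queue in between, the failure would presumably show up even later, on the worker, which makes such mistakes harder to trace.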

Java lets you create a dynamic proxy class to intercept the calls and modify the behaviour. Perhaps we could dynamically implement a proxy that doesn’t call the method directly but rather sends the message to the queue? Unfortunately, the method also has a typed return value. If we wanted to respect this interface, we’d always have to block before returning.
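Here is a rough sketch of that dead end, using java.lang.reflect.Proxy from the standard library. The Adder interface and the sentMessages list are made up for illustration (the JDK proxy only works with interfaces, and the list stands in for the queue). The handler can intercept the call and record a message, but since add is declared to return an int, it has to come up with the number before it returns.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical task interface; java.lang.reflect.Proxy can only implement interfaces.
interface Adder {
    int add(int x, int y);
}

class RemoteProxySketch {
    // Hypothetical stand-in for the queue: records what would be sent.
    static final List<String> sentMessages = new ArrayList<>();

    static Adder remoteAdder() {
        InvocationHandler handler = (proxy, method, args) -> {
            sentMessages.add(method.getName() + Arrays.toString(args));
            // The interface promises an int, so returning a Future is impossible.
            // A real implementation would block here until the worker replies;
            // this sketch computes the answer locally to stay runnable.
            int x = (Integer) args[0];
            int y = (Integer) args[1];
            return x + y;
        };
        return (Adder) Proxy.newProxyInstance(
                Adder.class.getClassLoader(), new Class<?>[]{Adder.class}, handler);
    }

    public static void main(String[] args) {
        int sum = remoteAdder().add(1, 2); // reads like a local, synchronous call
        System.out.println(sum + " via " + sentMessages);
    }
}
```

The call site looks tidy, but it hides the remote nature of the call and forces the caller to wait, which is exactly what Celery’s .delay(...) avoids.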

If we can’t solve our problem at runtime, maybe we can do it at compile time!

Generating code

What if the client interface looked like this?

Future<Integer> result = new TasksProxy(celery).add(1, 2);

The equivalent of Python’s fluidity in Java is code generation. We can wrap the dynamic delayTask in a statically typed method that ensures we don’t get runtime errors when casting the types or when the name of the method changes. This is the way I decided to go with the celery-java library.

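    // This class is entirely generated.
    class TasksProxy {

        // constructor not shown

        @SuppressWarnings("unchecked")
        Future<Integer> add(int x, int y) {
            // Go through Future<?>: Java rejects a direct cast from Future<Object>.
            return (Future<Integer>) (Future<?>) celery.delayTask(
                Tasks.class, "add", new Object[]{x, y}
            );
        }
    }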

If you compile with Maven, the generated code ends up in target/generated-sources/annotations/. You can inspect it like any other source, and it can include Javadoc.

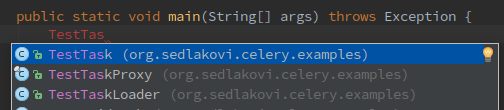

More importantly, IDEs like IntelliJ IDEA or NetBeans play well with the generated classes. They offer them for autocompletion almost instantly after you use the annotation that causes new code to be generated.

Unfortunately, generating code from annotations in Java is not as straightforward as writing a decorator in Python. That said, it’s not that hard. There’s a three-part series of articles describing how to generate code from annotations (part 1, 2, 3). I think there’s room for a framework or a language feature that would make such tasks easier.

You can look at the sources of celery-java. There’s a TaskProxy template that gets rendered by the TaskProcessor, which in turn is triggered by the @Task annotation.
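To give a feel for the rendering step without the annotation processing machinery, here is a standalone sketch. It is not the celery-java code: it swaps the annotation processor and the template for plain reflection and string concatenation, and ProxySourceSketch, render and boxed are names invented for this example. It reads the public methods of a task class and prints the source of a matching proxy (constructor and imports omitted).

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.lang.reflect.Parameter;
import java.util.StringJoiner;

class Tasks {
    public int add(int x, int y) {
        return x + y;
    }
}

// Hypothetical generator: reflection stands in for the annotation processing API.
class ProxySourceSketch {
    // Future<int> is not valid Java, so common primitives map to their wrappers.
    static String boxed(Class<?> type) {
        if (type == int.class) return "Integer";
        if (type == long.class) return "Long";
        if (type == double.class) return "Double";
        if (type == boolean.class) return "Boolean";
        if (type == void.class) return "Void";
        return type.getSimpleName();
    }

    static String render(Class<?> taskClass) {
        String name = taskClass.getSimpleName();
        StringBuilder src = new StringBuilder("class " + name + "Proxy {\n");
        for (Method m : taskClass.getDeclaredMethods()) {
            if (!Modifier.isPublic(m.getModifiers()) || m.isSynthetic()) continue;
            StringJoiner params = new StringJoiner(", ");
            StringJoiner args = new StringJoiner(", ");
            for (Parameter p : m.getParameters()) {
                // Names are arg0, arg1, ... unless compiled with javac -parameters.
                params.add(p.getType().getSimpleName() + " " + p.getName());
                args.add(p.getName());
            }
            String ret = "Future<" + boxed(m.getReturnType()) + ">";
            src.append("    ").append(ret).append(" ").append(m.getName())
               .append("(").append(params).append(") {\n")
               .append("        return (").append(ret).append(") (Future<?>) celery.delayTask(\n")
               .append("            ").append(name).append(".class, \"").append(m.getName())
               .append("\", new Object[]{").append(args).append("});\n")
               .append("    }\n");
        }
        return src.append("}\n").toString();
    }

    public static void main(String[] args) {
        System.out.print(render(Tasks.class));
    }
}
```

An annotation processor sees the source-level parameter names and generic types, so it does not suffer from the arg0-style names this reflection-based shortcut may produce.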

This is for the client code that submits the tasks. There’s similar support for loading the task code in a worker. You just need to put your task class on the classpath and the worker can process messages intended for it.

In the end, you’re able to give your API users ergonomics similar to those of dynamic languages, but with greater safety.

filip at sedlakovi.org - @koraalkrabba

Filip helps companies with a lean infrastructure to test their prototypes but also with scaling issues like high availability, cost optimization and performance tuning. He co-founded NeuronSW, an AI startup.

If you agree with the post, disagree, or just want to say hi, drop me a line: filip at sedlakovi.org