Reliably connecting Raspberry Pi to Internet — part 2

In part 1, we saw how to connect a Raspberry Pi to the Internet over multiple connections with different priorities. We also set up a WiFi hotspot so we could connect to the device when physically close to it.

Internet of Things management platform

We evaluated several IoT management platforms. I’ll pick the most notable one, Resin.io, which is actually quite neat. It cleanly separates the concerns of the base OS (security updates, connectivity, code deployment) from the space for your application. Your application runs in a Docker container, so it’s harder to unintentionally brick or disconnect the device.

For business reasons, we wanted full control over the stack, so we decided to roll our own minimalistic platform that does much less than the existing solutions. What we came up with is quite composable, and every component can be swapped for something better when needed.

How do we actually connect to the device?

During the build of each device’s image, we install openssh-server and generate a unique set of SSH keys. The device stores its private host key in /etc/ssh and the public half of a client key in /home/our_interactive_user/.ssh/authorized_keys. The other halves of these key pairs (the public host key and the private client key) are stored in our device registry.
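To make the build step more concrete, here is a minimal Python sketch that only assembles the ssh-keygen calls for one device. It assumes Ed25519 keys, a hypothetical staging directory `rootfs` for the image contents, and the `inventory/<device>/` registry layout described below; it is an illustration, not our actual build script.

```python
import os


def keygen_commands(device, registry="inventory", image_root="rootfs"):
    """Return ssh-keygen argument lists that create one device's two key pairs.

    `image_root` (a staging directory for the image) is hypothetical.
    """
    device_dir = os.path.join(registry, device)
    return [
        # Host key pair: the private half lands in the image's /etc/ssh;
        # ssh-keygen writes the public half next to it with a .pub suffix,
        # and that public half is what goes into the registry.
        ["ssh-keygen", "-q", "-t", "ed25519", "-N", "", "-C", device,
         "-f", os.path.join(image_root, "etc", "ssh", "ssh_host_ed25519_key")],
        # Client key pair: the private half stays in the registry as access.key;
        # access.key.pub goes into the image's authorized_keys.
        ["ssh-keygen", "-q", "-t", "ed25519", "-N", "", "-C", "access@" + device,
         "-f", os.path.join(device_dir, "access.key")],
    ]


if __name__ == "__main__":
    for cmd in keygen_commands("device-1"):
        print(cmd)
```

Each list can be passed to `subprocess.run(cmd, check=True)`. Run it on a fresh build: ssh-keygen does not create missing directories and asks before overwriting an existing key.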

Anybody with access to this registry can connect securely to the devices. Because we store the hosts’ public keys, we never have to answer SSH’s annoying and unsafe prompt about an unknown host.
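For that check to work, the stored host key has to be in known_hosts format, which prefixes the key with the host names it is valid for; a plain `.pub` file from ssh-keygen lacks that prefix. The sketch below assumes the registry starts from raw `.pub` contents and converts them. The function names are hypothetical. Hosts on a non-default port are written as `[host]:port`, which matters once you reach a device through a forwarded port.

```python
def known_hosts_line(pub_key_text, patterns):
    """Convert '<type> <base64> [comment]' into '<patterns> <type> <base64>'."""
    key_type, key_b64 = pub_key_text.split()[:2]
    return "{} {} {}".format(",".join(patterns), key_type, key_b64)


def device_patterns(host, gateway=None, port=None):
    """Names under which ssh may look up this device's host key."""
    patterns = ["{}.local".format(host)]
    if gateway is not None and port is not None:
        # ssh stores hosts on non-default ports as [host]:port.
        patterns.append("[{}]:{}".format(gateway, port))
    return patterns
```

For example, feeding the contents of a device’s ssh_host_ed25519_key.pub and `device_patterns("device-1")` yields a line starting with `device-1.local ssh-ed25519 `.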

All the devices run Avahi, which means they announce their hostnames and IP addresses on the local network using multicast DNS. It’s a sort of self-configuring DNS. If you’re on the same network, you can reach your device via ssh user@device.local. I was surprised how well this setup actually worked. It only requires each device to have a unique hostname.
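Because everything hinges on unique hostnames, a small check before adding a device can save some confusion. This is a hypothetical helper, not part of Avahi: it flags names that are not a valid single DNS label, and names that collide, comparing case-insensitively because DNS names (including mDNS ones) are case-insensitive.

```python
import re
from collections import Counter

# One DNS label: letters, digits and hyphens, no leading or trailing hyphen, at most 63 chars.
LABEL = re.compile(r"[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?")


def hostname_problems(hostnames):
    """Return human-readable problems found in a list of device hostnames."""
    problems = []
    for name in hostnames:
        if not LABEL.fullmatch(name.lower()):
            problems.append("invalid hostname: " + name)
    # Device-1 and device-1 would announce the same .local name.
    for name, count in Counter(h.lower() for h in hostnames).items():
        if count > 1:
            problems.append("duplicate hostname: " + name)
    return problems
```

Since the registry folders are named after the hosts, `hostname_problems(os.listdir("inventory"))` is one way to run it.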

How to update all devices at once?

With Ansible, you can run any command over SSH, or more complex scripts (called playbooks), in parallel across your whole fleet. This command distributes a file to all your devices at once and backs up the original version on each device; Ansible’s output lists the hosts for which it failed.

ansible --become -m copy -a "src=device-hosts dest=/etc/hosts backup=yes" iotdevices
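If you drive Ansible from your own tooling, you might wrap this ad-hoc call in Python. The sketch below only builds the argument list. `--limit` is a standard Ansible option that restricts a run to the given hosts, which is handy for retrying just the devices that failed; the function name and defaults are illustrative.

```python
def adhoc_copy(src, dest, pattern="iotdevices", limit=None):
    """Build an `ansible` ad-hoc call that copies src to dest with a backup."""
    cmd = ["ansible", "--become", "-m", "copy",
           "-a", "src={} dest={} backup=yes".format(src, dest)]
    if limit:
        # Only touch these hosts, e.g. the ones that failed last time.
        cmd += ["--limit", ",".join(limit)]
    cmd.append(pattern)
    return cmd
```

For example, `subprocess.run(adhoc_copy("device-hosts", "/etc/hosts", limit=["device-4"]), check=True)` repeats the copy on device-4 only.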

We happen to implement the device registry as a folder committed to git, so the access rights of our git hosting control who can access the fleet. A custom Ansible inventory script walks over the contents of this folder and parses the settings for each device. If needed, this registry could be swapped for a centralized system with fine-grained permissions.

The folder structure looks like this:

inventory
├── device-1          # host name of the device
│   ├── access.key    # secret key to access the device
│   ├── _config.yml   # device-specific variables (group membership and config)
│   └── hostkeys.pub  # device public host keys to prevent man-in-the-middle attacks
└── device-2
    ├── access.key
    └── hostkeys.pub
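Since the registry is just a folder in git, a quick consistency check (for example in a pre-commit hook or a CI job) can catch a half-added device. This hypothetical helper treats access.key and hostkeys.pub as required and _config.yml as optional, as device-2 above suggests.

```python
import os

# Files every device folder must contain; _config.yml is optional.
REQUIRED = ("access.key", "hostkeys.pub")


def check_registry(root="inventory"):
    """Map each device folder to the list of required files it is missing."""
    missing = {}
    for device in sorted(os.listdir(root)):
        path = os.path.join(root, device)
        if not os.path.isdir(path):
            continue  # ignore stray files next to the device folders
        absent = [f for f in REQUIRED if not os.path.isfile(os.path.join(path, f))]
        if absent:
            missing[device] = absent
    return missing
```

An empty result means every device folder is complete.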

Ansible lets you use an executable script as an inventory. The script can go through the folders and tell Ansible about the configured machines. The simplest inventory script could look like this:

#!/usr/bin/env python3
import json
import os

all_hosts = {}
# Every folder in the registry is one device, named after its hostname.
for host in os.listdir('inventory'):
    all_hosts[host] = {
        'ansible_ssh_private_key_file': 'inventory/{}/access.key'.format(host),
        'ansible_ssh_user': 'our_interactive_user',
        'ansible_ssh_common_args': '-o UserKnownHostsFile=inventory/{}/hostkeys.pub'.format(host),
        'ansible_ssh_host': '{}.local'.format(host)
    }

print(json.dumps({
    # Group name used as the target of the ad-hoc command above.
    'iotdevices': {
        'hosts': list(all_hosts.keys()),
    },
    # Providing hostvars here saves Ansible a --host call per device.
    '_meta': {
        'hostvars': all_hosts,
    }
}))
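Ansible calls an inventory script with `--list`, and may call it with `--host <name>` for per-host variables; returning `_meta.hostvars`, as above, lets it skip those per-host calls. A slightly more defensive sketch of the same script handles both arguments, skips stray files in the registry folder and tolerates a missing registry. The group name `iotdevices` matches the ad-hoc command earlier; everything else mirrors the script above.

```python
#!/usr/bin/env python3
import argparse
import json
import os

REGISTRY = "inventory"


def host_vars(host):
    """Connection settings for one device, taken from its registry folder."""
    device_dir = os.path.join(REGISTRY, host)
    return {
        "ansible_ssh_private_key_file": os.path.join(device_dir, "access.key"),
        "ansible_ssh_user": "our_interactive_user",
        "ansible_ssh_common_args": "-o UserKnownHostsFile=" + os.path.join(device_dir, "hostkeys.pub"),
        "ansible_ssh_host": host + ".local",
    }


def build_inventory():
    if os.path.isdir(REGISTRY):
        hosts = sorted(h for h in os.listdir(REGISTRY)
                       if os.path.isdir(os.path.join(REGISTRY, h)))
    else:
        hosts = []  # no registry yet: empty inventory instead of a crash
    return {
        "iotdevices": {"hosts": hosts},
        "_meta": {"hostvars": {h: host_vars(h) for h in hosts}},
    }


def main(argv=None):
    parser = argparse.ArgumentParser(description="Ansible inventory from the device registry")
    parser.add_argument("--list", action="store_true", help="print the whole inventory")
    parser.add_argument("--host", help="print the variables of a single host")
    args = parser.parse_args(argv)
    if args.host:
        print(json.dumps(host_vars(args.host)))
    else:
        print(json.dumps(build_inventory()))


if __name__ == "__main__":
    main()
```

Make it executable and point Ansible at it, e.g. `ansible -i ./inventory.py iotdevices -m ping`, where the file name is only an example.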

Because it is an arbitrary executable, the script can do much more: set correct permissions on the key files, generate ~/.ssh/config entries, or run any other logic. It could also fetch all the data from an external registry service.
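Two of those extras, sketched in Python. git only records whether a file is executable, so a checked-out access.key usually ends up readable by others, and ssh refuses to use a private key with such loose permissions. The second helper renders a ~/.ssh/config entry so you can simply type `ssh device-1`; it follows the registry layout above, and both helper names are hypothetical.

```python
import os
import stat


def fix_key_permissions(path):
    """Restrict a private key to owner read/write (0o600) so ssh accepts it."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)


def ssh_config_entry(host, registry="inventory", user="our_interactive_user"):
    """Render one ~/.ssh/config block for a device in the registry."""
    device_dir = os.path.abspath(os.path.join(registry, host))
    return "\n".join([
        "Host " + host,
        "    HostName {}.local".format(host),
        "    User " + user,
        "    IdentityFile " + os.path.join(device_dir, "access.key"),
        "    UserKnownHostsFile " + os.path.join(device_dir, "hostkeys.pub"),
        "",
    ])
```

The inventory script could call `fix_key_permissions` on every access.key it finds and append the rendered entries to a generated config file.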

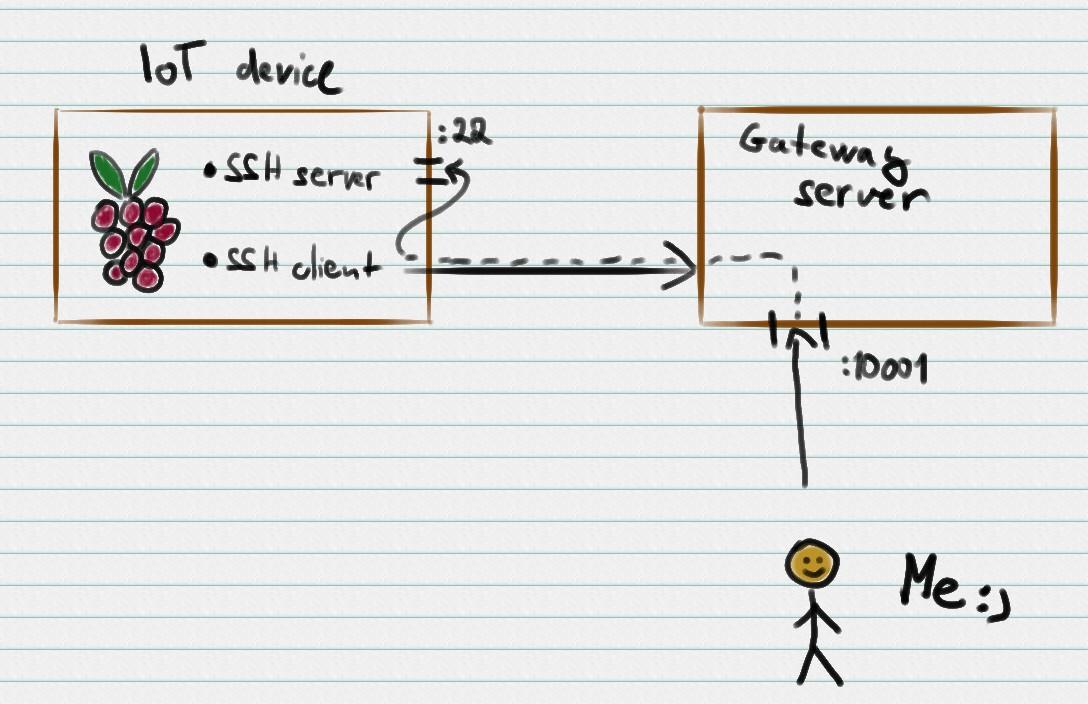

What if I’m far away from the device?

That works fine in the office, but my devices in the wild connect via modems and end up behind mobile carriers’ NATs. We need a server with a public IP address that relays traffic between two NATed computers (mine and the device).

A good choice is to create a VPN. With OpenVPN’s client-config-dir option, you can assign static addresses to the clients and register these addresses in your internal DNS server. I can’t fully vouch for this setup because I haven’t tested it, mostly because I overlooked the client-config-dir option at the time.

Instead, I used a little trick with an OpenSSH server. All our devices have a serial number in their hostname. We parse this name and compute a port number that the gateway server forwards to the device. The device connects to the gateway server via SSH and asks it to forward that port back over the connection (a remote port forward). For example, device-1 gets port 10001 on the gateway server. A connection to gateway-server:10001 piggybacks on the SSH connection initiated by the device and reaches the device’s local SSH server.
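The port arithmetic is simple enough to sketch directly: a device named `device-<serial>` gets port 10000 + serial, so device-1 maps to 10000 + 1 = 10001. The range check and the `ssh -R` argument helper are additions for illustration, not part of the original setup; the check matters because TCP ports stop at 65535, so this scheme only covers serials up to 55535.

```python
BASE_PORT = 10000


def tunnel_port(hostname):
    """device-<serial> -> BASE_PORT + serial, e.g. device-1 -> 10001."""
    serial = int(hostname.rsplit("-", 1)[-1])
    port = BASE_PORT + serial
    if not 1024 <= port <= 65535:
        raise ValueError("serial {} does not map to a usable port".format(serial))
    return port


def remote_forward(hostname):
    """Argument for `ssh -R`: listen on all gateway interfaces, forward to the device's sshd."""
    return "*:{}:localhost:22".format(tunnel_port(hostname))
```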

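To connect from Ansible via this gateway, we just need to add these host variables to the inventory script. The host keys and authentication remain the same as when connecting locally; just note that for a non-standard port, ssh looks the host key up under [host]:port, so the entries in hostkeys.pub must match that name as well.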

# If the device is named like device-1, connect to port 10001...
'ansible_ssh_port': 10000 + int(host.split('-')[-1]),
# ... on the gateway server.
'ansible_ssh_host': 'gateway-server.in.your.network',

On the gateway server, we create a separate user for each device (with the shell set to /bin/false) and assign it a unique authorized key. Each device’s folder gets a new file, sshtunnel.key, which the device uses to authenticate to the server. For example, device-4 connects as sshtunnel-device-4@gateway-server.example.com. All of this is managed by an Ansible playbook that reads the device registry directory and sets up the users and keys on the server.
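Per device, that playbook boils down to creating a locked-down account and installing one authorized key. A Python sketch of those two pieces follows. The naming scheme is the one described above; the `restrict,port-forwarding` key options (supported since OpenSSH 7.2) are an optional hardening step assumed here, not necessarily part of the original setup. The public half of sshtunnel.key can be printed with `ssh-keygen -y -f sshtunnel.key`.

```python
def tunnel_user(host):
    """Per-device account on the gateway, e.g. device-4 -> sshtunnel-device-4."""
    return "sshtunnel-" + host


def useradd_command(host):
    # -m creates a home directory to hold ~/.ssh/authorized_keys;
    # -s /bin/false prevents interactive shells.
    return ["useradd", "-m", "-s", "/bin/false", tunnel_user(host)]


def authorized_keys_line(pubkey):
    # "restrict" disables PTY allocation and all forwarding;
    # "port-forwarding" re-enables port forwarding, which the tunnel needs.
    return "restrict,port-forwarding " + pubkey.strip()
```

The line returned by `authorized_keys_line` goes into /home/sshtunnel-device-4/.ssh/authorized_keys (assuming the default home directory location), owned by that user.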

The server’s sshd configuration needs GatewayPorts yes, so that the forwarded ports listen on all interfaces rather than only on loopback, and a firewall that prevents unauthorized access to these ports. You also need to enable keepalive packets on both the device and the server (ServerAliveInterval and ClientAliveInterval, respectively), which lets ssh detect when the connection drops. On the server, this frees the port for a new connection from the device; on the device, ssh terminates, signaling systemd to restart it.
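A back-of-the-envelope check of how quickly a dead link is noticed: each side gives up after roughly interval × count-max seconds without a reply, and both ServerAliveCountMax and ClientAliveCountMax default to 3. With the 15-second intervals in the configs below, that is about 15 × 3 = 45 seconds, which matches the example in the sshd_config man page. The function name is illustrative.

```python
def dead_peer_timeout(interval_s, count_max=3):
    """Approximate seconds before ssh/sshd drops an unresponsive peer.

    A keepalive is sent after `interval_s` seconds without data from the
    peer; once `count_max` of them go unanswered, the connection is closed.
    """
    return interval_s * count_max


# Server side (ClientAliveInterval 15): the forwarded port is freed after about 45 s.
# Device side (ServerAliveInterval 15): ssh exits after about 45 s, and systemd
# starts a new one RestartSec=5 seconds later.
print(dead_peer_timeout(15))
```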

Example of the server config (/etc/ssh/sshd_config):

PermitRootLogin no
PasswordAuthentication no
GatewayPorts yes
ClientAliveInterval 15
# Skipping reverse DNS lookups of clients speeds up connection establishment
UseDNS no

Example of the client options (let’s store them at /etc/sshtunnel/config):

Host gateway-server
    HostName gateway-server.example.com
    User sshtunnel-{{ ansible_hostname }}
    ServerAliveInterval 15
    UserKnownHostsFile /etc/sshtunnel/gateway-server.pub
    HashKnownHosts no
    # Without forwarding, the connection is useless
    ExitOnForwardFailure yes
    RemoteForward *:{{ port }} localhost:22

The service file then looks like this:

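[Unit]
Description=SSH tunnel
After=network-online.target
Wants=network-online.target

[Service]
# -N: run no remote command; the tunnel user's shell is /bin/false,
# so without it the session would end right after login
ExecStart=/usr/bin/ssh -N -F /etc/sshtunnel/config gateway-server
Restart=always
RestartSec=5
User=nobody
Group=nogroup

[Install]
WantedBy=multi-user.target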

Don’t use this solution :-)

This solution is cheap, works fine in practice, and was fun to design. But it has all the drawbacks of custom solutions: most notably, you will have to maintain it yourself and explain it to every new team member. Make sure you really need it before passing on the existing solutions (like Resin).

Edit: Part 3 follows, in which you’ll see how to automate the installation of your fleet of devices.

filip at sedlakovi.org - @koraalkrabba

Filip helps companies build lean infrastructure for testing their prototypes, and also tackle scaling issues like high availability, cost optimization and performance tuning. He co-founded NeuronSW, an AI startup.

If you agree with the post, disagree, or just want to say hi, drop me a line: filip at sedlakovi.org